Research & Development

AR Echography

I've proposed using AR (Augmented Reality) technology to support echography by superimposing the cross section and internal organs onto the physician's view.

The shape of the internal organ and the probe position and angle previously recorded by a skilled physician are visualized. This system was confirmed to help unskilled physicians acquire echograms.

The cross section is superimposed onto the view of the physician wearing an HMD (HoloLens / Magic Leap). This is based on markerless tracking technology and real-time transmission of the probe position/angle and ultrasound images.

AR system for remote instruction in echography. The left image is the interface on the remote doctor's side, and the right image is the AR interface on the patient's side. Instruction in probe operation was achieved using visual information alone.

Dynamic body mark for echography. The procedure for acquiring an echogram is recorded and visualized using volumetric video. This technology is useful not only for archiving medical skills but also for remote collaboration in telemedicine.

Wearable Motion Sensing

I've developed motion sensing systems using wearable sensors such as IMUs, EMG sensors, and cameras to support training in sports and rehabilitation.

IMU sensors and SLAM technology were used together for motion capture. The IMUs provide occlusion-free sensing of joint angles, and the SLAM sensor tracks the spatial position of the human body. See also the demos of full-body tracking and sports sensing.

This application was developed to make hand motion training in rehabilitation more engaging and enjoyable for patients. A virtual character moves forward while the patient’s arm is kept horizontal, and changes direction when the hand is bent to the left or right.
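The control mapping described above can be sketched as follows. This is only an illustration, not the actual implementation: the function name `step_character`, the angle thresholds, the speed, the turn rate, and the sign convention (positive bend means left) are all assumptions chosen for the example.

```python
import math


def step_character(x, y, heading_deg, arm_pitch_deg, wrist_bend_deg, dt,
                   level_tolerance_deg=15.0, bend_threshold_deg=20.0,
                   speed=1.0, turn_rate_deg=90.0):
    """Advance the character by one time step and return (x, y, heading_deg).

    All parameters and thresholds are hypothetical values for illustration.
    """
    # Turn while the hand is bent past the threshold.
    # Assumed sign convention: positive = bent left, negative = bent right.
    if wrist_bend_deg > bend_threshold_deg:
        heading_deg += turn_rate_deg * dt
    elif wrist_bend_deg < -bend_threshold_deg:
        heading_deg -= turn_rate_deg * dt

    # Move forward only while the arm is close to horizontal.
    if abs(arm_pitch_deg) <= level_tolerance_deg:
        heading = math.radians(heading_deg)
        x += speed * dt * math.cos(heading)
        y += speed * dt * math.sin(heading)

    return x, y, heading_deg
```

In a sketch like this, the tolerance around horizontal could be tuned per patient so that the exercise stays achievable as well as challenging.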

Collaborative Research

In addition to my own research, I also collaborate with research teams in the School of Medicine and in civil engineering.

In collaboration with the Fujibuchi Lab at Kyushu University, I developed a medical training application that visualizes invisible radiation distributions in AR and quantifies the exposure levels for medical staff based on their positions relative to radiation-emitting medical devices.

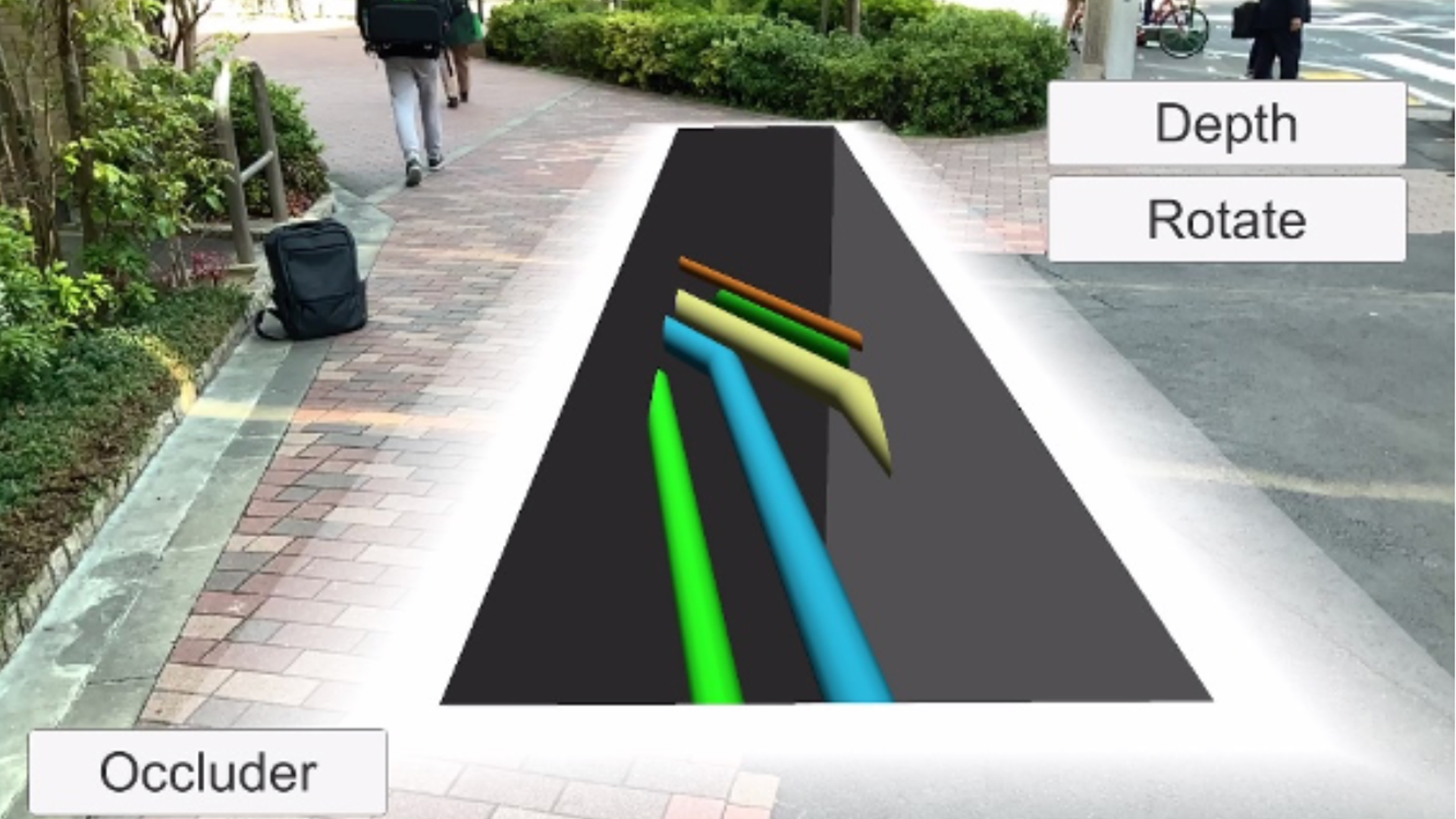

In collaboration with the Kashiyama Lab at Chuo University, I conducted research and developed applications that visualize underground utilities such as pipelines beneath roads using AR. This technology is expected to help prevent accidents during road construction.

Hobby & Prototyping

Project HoloBox: A perspective effect is achieved on an iPad without a dedicated 3D display. In addition, interaction between the virtual world inside the iPad display and AR devices such as HoloLens 2 is supported. Related works [Link]

Prototype of a system that synchronizes a real door with its portal representation. I believe that more advanced Mixed Reality experiences can be achieved by synchronizing real and virtual objects in this way.

StickAR is a Quest app that captures text from the headset’s camera view, uses AI to extract the characters, and lets you paste the result into tools like Google Docs. It works like an AR sticky note, letting you quickly grab handwritten or real-world text without using a virtual keyboard.

A prototype on Meta Quest that reflects real-world lighting conditions. Information about the measured positions and shapes of real objects, which is necessary for representing lighting intensity and shadows, is transmitted to the Quest via WebSocket or WebRTC.

Exploring depth data on Meta Quest 3, I enabled masking for virtual spray painting by using real-world objects as mask geometry. By leveraging the headset’s depth information, virtual spray patterns can accurately respect the shapes and positions of physical items in the environment.

HoloTuberKit. This system broadcasts point clouds over the internet and visualizes them on XR devices. The point cloud viewer is compatible with HoloLens, Nreal Light, Meta Quest, and ARCore devices. You can download these apps from GitHub. [Download]

Remote communication system based on volumetric video streaming and AR/VR technology. Users can communicate remotely while sharing and manipulating virtual objects (2D images, videos, and 3D models). In this demonstration, Nreal Light was used on both sides.

An attempt at creating AR fireworks. An iPad was used as a controller to place and adjust the origin of the fireworks in real space, while HoloLens 2 was used as the AR viewer. This setup allows users to intuitively control where the fireworks appear and experience them seamlessly integrated into the environment.

Sending digital data to AR space by shaking a smartphone. A shaking gesture detected by the smartphone’s accelerometer is used as a trigger to send 2D/3D images to HoloLens. This system was inspired by science-fiction movies.
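One simple way to detect a shake from accelerometer readings is to count samples whose magnitude deviates strongly from gravity. The sketch below assumes samples arrive as `(ax, ay, az)` tuples in m/s²; the function name `detect_shake`, the threshold, and the minimum peak count are hypothetical, and the actual system may use a different method.

```python
import math

GRAVITY = 9.81  # m/s^2, magnitude measured when the phone is at rest


def detect_shake(samples, threshold=15.0, min_peaks=3):
    """Return True if enough samples deviate strongly from gravity.

    samples: iterable of (ax, ay, az) accelerometer readings in m/s^2.
    threshold and min_peaks are illustrative values, not tuned ones.
    """
    peaks = 0
    for ax, ay, az in samples:
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        # A resting phone reads roughly GRAVITY; a shake produces large spikes.
        if abs(magnitude - GRAVITY) > threshold:
            peaks += 1
    return peaks >= min_peaks
```

Requiring several peaks rather than one helps avoid triggering on a single bump, such as setting the phone down on a table.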

A prototype system that transfers 3D objects scanned by a smartphone to XR devices. This system enables sharing 3D objects with people in remote locations, as long as there is an internet connection.

Seeing a remote environment through a finger frame. Leap Motion is used to detect the gesture and adjust the size of a window displayed as an AR image on Meta 2. Please also see our related work with this system. [Link]

DejaViewer: A prototype system that uses the Meta Quest 3’s depth data and color camera input to instantly record and replay the shape and motion of objects in front of the user.

Test development of a half-mirror AR system and interaction between a virtual character and the user’s hand.

This style of AR makes it easier for users, especially children, to experience optical see-through AR without wearing an HMD.

Spatial Clipper. This system can extract a spatial mesh within a clipping sphere. It can also transmit the extracted mesh to other devices [Demo]. Using this approach, hands-free scanning and sharing of spatial geometry with remote users can be achieved.
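Extracting the part of a mesh inside a clipping sphere can be sketched as below. This version assumes an indexed triangle mesh and keeps only triangles whose three vertices all lie inside the sphere; the function name `clip_mesh_to_sphere` is hypothetical, and the actual system may instead cut triangles along the sphere boundary.

```python
def clip_mesh_to_sphere(vertices, triangles, center, radius):
    """Return (vertices, triangles) for the sub-mesh inside a sphere.

    vertices:  list of (x, y, z) tuples.
    triangles: list of (i, j, k) index tuples into vertices.
    Only triangles fully inside the sphere are kept (a simplification).
    """
    r2 = radius * radius
    inside = [
        sum((v[a] - center[a]) ** 2 for a in range(3)) <= r2
        for v in vertices
    ]

    remap = {}          # old vertex index -> new vertex index
    new_vertices = []
    new_triangles = []
    for tri in triangles:
        if all(inside[i] for i in tri):
            new_tri = []
            for i in tri:
                if i not in remap:
                    remap[i] = len(new_vertices)
                    new_vertices.append(vertices[i])
                new_tri.append(remap[i])
            new_triangles.append(tuple(new_tri))
    return new_vertices, new_triangles
```

Re-indexing the kept vertices keeps the extracted mesh compact, which matters when it is transmitted to another device.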

AR shooter using HoloLens 2 and an EMG sensor. A virtual bullet is fired when the user strongly clenches their hand. Wearable sensors such as EMG enable new types of interaction that cannot be achieved using image processing alone.
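A clench-to-fire trigger of this kind can be sketched by rectifying the EMG signal, smoothing it with a moving average, and applying a threshold with hysteresis so that one clench fires one bullet. The function name `detect_clenches`, the window size, and both thresholds are assumptions for illustration, and the signal is assumed to be normalized to roughly the range -1 to 1.

```python
def detect_clenches(emg, window=5, on_threshold=0.5, off_threshold=0.3):
    """Return the sample indices at which a clench is detected.

    emg: sequence of raw EMG samples (assumed normalized to about [-1, 1]).
    A clench fires once when the smoothed envelope rises to on_threshold,
    and cannot fire again until the envelope falls to off_threshold.
    """
    fired = []
    active = False
    recent = []  # last `window` rectified samples
    for i, sample in enumerate(emg):
        recent.append(abs(sample))       # full-wave rectification
        if len(recent) > window:
            recent.pop(0)
        envelope = sum(recent) / len(recent)  # moving-average envelope

        if not active and envelope >= on_threshold:
            active = True
            fired.append(i)
        elif active and envelope <= off_threshold:
            active = False
    return fired
```

The gap between the two thresholds prevents repeated firing while the muscle activity hovers near the trigger level.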

Making a flat wall into a touch panel using depth and image processing techniques. This demonstrates an interactive transformation of a virtual peephole that allows users to see into the next room. The image of the next room is captured by a web camera.

3D objects are generated using ChatGPT and displayed in AR.

Instead of using a virtual keyboard for prompt input, this system utilizes AI-based character recognition, enabling more intuitive and seamless interaction.

Sharing an AR experience among multiple users and devices.

In this video, not only virtual objects but also user operations are shared between HoloLens users and a smartphone that records the scene, using bidirectional communication.